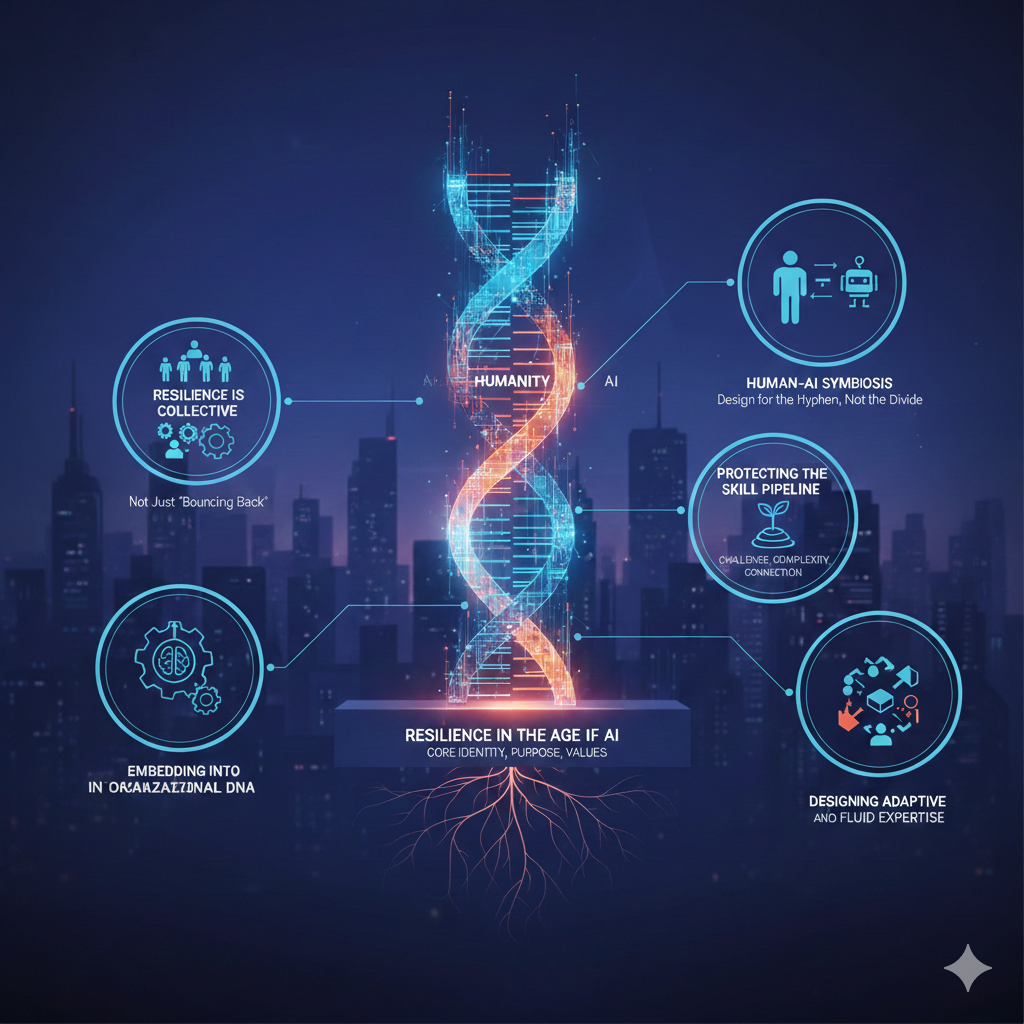

This year, our shared inquiry focuses on Resilience in the Age of AI: how organizations can survive and thrive amid AI-driven disruption while preserving their core identity, purpose, and values. Across our gatherings to date, several powerful themes have emerged. Below are five key insights, each tied to concrete challenges organizations are facing right now around skills, structures, and processes in an AI-enabled world.

1. Resilience is Collective – Not Just “Bouncing Back”

What we’ve learned:

LILA’s working definition frames resilience as the collective capacity of co‑occurring systems (people, teams, structures, culture, technology) to adapt in the short term, evolve over time, and transform when needed—while preserving core identity, purpose, and values. Resilience is less about individual grit, and more about how organizations design environments, supports, and connections that let people navigate and negotiate for what they need.

The challenge:

Most organizations still respond to AI as if it were an individual learning problem (“train people on AI tools”) rather than a systemic design challenge. Skills programs stall when workflows, governance, incentives, and support systems don’t change in parallel.

What we’re exploring at LILA:

– How to map co‑occurring systems (workflows, governance, learning mechanisms, informal networks) and identify where small structural changes can create outsized gains in resilience.

– How to treat pushback and resistance as data, surfacing where AI rollouts are eroding well‑being, learning, or equity.

2. Human–AI Symbiosis: Design for the Hyphen, Not the Divide

What we’ve learned:

The most resilient organizations don’t ask “AI or humans?”—they design for human–AI symbiosis. AI excels at pattern recognition, scale, and speed; humans excel at tacit judgment, ethics, contextual awareness, and creativity. Real value emerges when we design the “hyphen” space—interfaces, workflows, feedback loops, and accountability structures that let humans and AI learn with and from each other.

The challenge:

Many organizations over‑index on efficiency and automation, risking de‑skilling, over‑reliance on AI, and the erosion of human capabilities that underpin resilience. Leaders often lack clear operating boundaries for when to trust AI, where human oversight is non‑negotiable, and how to make AI’s role transparent.

What we’re exploring at LILA:

– Practical rules and frameworks (e.g., always inviting AI to the table, but always keeping humans in the loop for judgment, ethics, and context).

– AI trust frameworks and operating boundaries that clarify domains of use, escalation protocols, and where redundancy (human + AI) is essential for resilience.

– Ways to implement dual‑learning tracks that build both AI fluency and human meta‑skills (adaptability, critical thinking, ethical reasoning, contextual awareness).

3. Protecting the Skill Pipeline: Challenge, Complexity, and Connection

What we’ve learned:

AI and robotics can deliver 10–15% productivity gains for most workers—but at the cost of quietly hollowing out the next generation of expertise. As experts become more self‑sufficient with AI, novices lose access to the 3 Cs that build real mastery:

– Challenge at the edge of capability,

– Complexity from messy, real‑world problems,

– Connection through close expert–novice relationships and communities of practice.

Shadow learners (those who bend rules to get hands‑on experience) are a signal that formal systems are failing to provide developmental opportunities.

The challenge:

Leaders are under pressure to adopt AI for immediate efficiency while unintentionally eroding the very learning conditions that make future resilience possible. Entry‑level roles shrink, mentoring time disappears, and organizations lack metrics for tracking skill erosion until it’s too late.

What we’re exploring at LILA:

– How to conduct a “skill erosion audit” before each AI deployment: for every task automated, ask which novice learning opportunity disappears, and how to replace it.

– How to refactor work so juniors and seniors collide more often on high‑judgment tasks, making mentorship and tacit knowledge transfer an organic part of workflow design.

– How to use AI itself to measure and protect connection, skill growth, and psychologically safe relationships, treating capability development as a core resilience metric rather than a side effect.

4. Embedding Learning into Organizational DNA

What we’ve learned:

In a landscape of radical uncertainty (the “AI fog”), waiting to “know” before acting is no longer viable. Resilient organizations stay in learning, not knowing. They treat AI adoption as a continuous strategic process, not a one‑time implementation, and they weave skill development into the fabric of structures and routines rather than into standalone courses.

Frameworks we’ve worked with (like MELDS – Mindset, Experimentation, Leadership, Data, Skills) and the idea of bootstrap learning show that effective adaptation requires disciplined experimentation: starting small, iterating in low‑risk but meaningful contexts, and sharing learning across teams.

The challenge:

Many organizations still measure AI success by adoption rates and short‑term efficiency, rather than by whether people and systems are actually learning, adapting, and becoming more resilient. Learning is often reactive (after disruption), not anticipatory.

What we’re exploring at LILA:

– How to embed anticipatory skill planning into existing programs so every domain routinely asks, “How is AI changing this work, and what skills and processes do we need next?”

– How to shift metrics and governance to a dual mandate: every AI or tech investment must enhance both productivity and human capability (learning, judgment, adaptability).

– How leaders can model being learners with AI, openly sharing their own experiments, missteps, and questions to normalize ongoing exploration under uncertainty.

5. Designing Adaptive Structures: Flash Teams and Fluid Expertise

What we’ve learned:

Organizational structures, though often invisible, are a critical determinant of resilience in the age of AI. Research on flash teams shows how AI-augmented, on‑demand teams can pull in global expertise within minutes, dynamically reshape membership as problems evolve, and improve effectiveness when combined with strong human integration leadership and relational coordination.

Resilient organizations treat expertise as everywhere, all the time, and use AI to dynamically assemble the right people (and AI agents) around the right problem, then reconfigure as conditions change—without losing a sense of purpose, culture, or belonging.

The challenge:

Most organizations are still constrained by static org charts and slow hierarchies that struggle to keep pace with AI-enabled change. At the same time, more fluid teaming raises new questions: How do you maintain culture, identity, and connection as people and AI agents cycle in and out of teams at high speed? How do you balance the value of stable teams with the flexibility of flash teams?

What we’re exploring at LILA:

– How to use AI not just to automate tasks, but as a mirror for organizational design decisions, revealing hidden assumptions, trade‑offs, and alternative structures.

– How to build adaptive, AI-enabled team architectures that preserve belonging and shared purpose while enabling rapid reconfiguration around emerging challenges.

– How leaders can cultivate three key mindsets (entrepreneurial vision, “experts everywhere,” and AI‑driven org design) to turn teaming structures into engines of resilience rather than sources of fragility.

Across all of this work, one through‑line stands out: resilience in the age of AI is not about resisting change or surrendering to technology—it is about designing human–AI systems that can keep learning, adapting, and transforming together, without losing what matters most.

LILA offers a space where leaders, scholars, and practitioners can:

– Co‑create intellectual insights grounded in cutting‑edge research;

– Build social connections across sectors and disciplines;

– Generate practical impact by testing ideas in real organizational contexts.

If you’re interested in joining this community of inquiry—and in shaping how your organization develops skills, structures, and processes that are truly resilient in the age of AI—I would be delighted to continue the conversation.
