Anna-Sophie Ulfert-Bank’s session centered on unraveling the complexities of trust within our human-AI collaborations. She shed

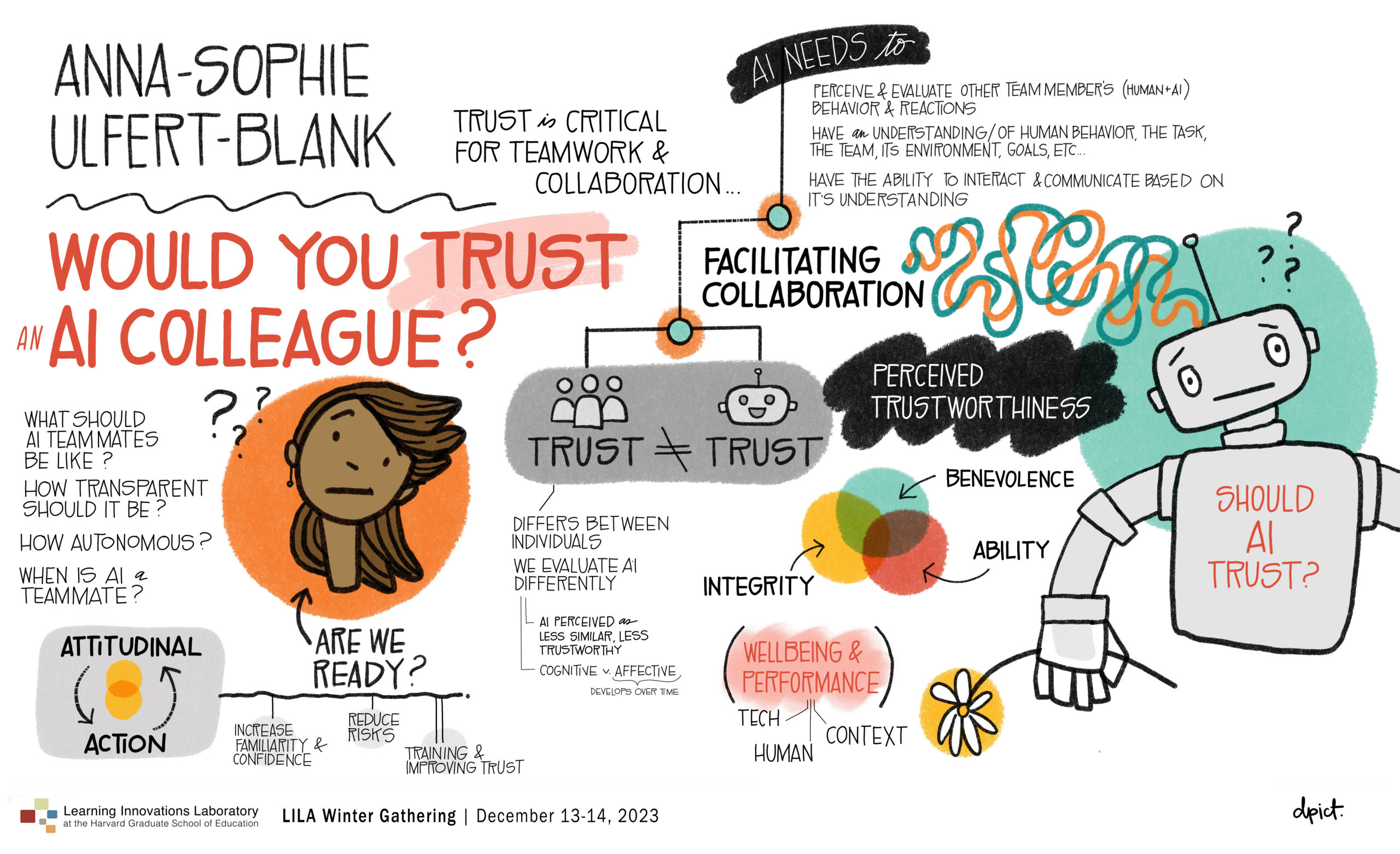

light on the challenges and intricacies of defining and measuring trust in dynamic team settings, and shared her evolving research into the nature of trust within AI teams, considering factors ranging from

technology to human dynamics and context.

The Importance of Trust

Collaboration, whether human-to-human or human-AI, hinges on trust. But is the trust we discuss in these two settings the same? For now, the answer remains elusive. The trust found within human-AI collaboration is a particular beast: it involves humans grappling with their own vulnerability. Can we really rely on AI teammates? Too much trust can be detrimental, while too little can hinder engagement. To navigate this complex landscape, we need a deeper understanding of trust itself.

Human-AI relationships introduce new layers of complexity, where factors like training, expertise, uncertainty, and reliability all play a role. Can we simply apply what we know from human-human teams, or do we need new theoretical frameworks?

So, how do we decide whether or not we can trust an AI teammate? Anna-Sophie studied this question in human-AI consulting teams before the rise of chatbots like ChatGPT. Interestingly, trust differed significantly between individuals. It seemed to stem from a subjective belief about how well the AI performed its tasks, with AI often perceived as less similar and less trustworthy than human team members. Our preconceptions about AI play a powerful role in shaping our experience.

While interpersonal and team trust within human-only teams tends to be higher, identifying as a team in mixed human-AI settings can actually have a positive impact on performance, even if individual trust in the AI remains lower. This underscores the importance of fostering a collaborative environment where humans and AI work together towards shared goals.

Finding the Right Balance in AI Autonomy

High levels of AI autonomy can be a double-edged sword. While autonomy can boost efficiency, it can also decrease human attention, situational awareness, and acceptance of the AI’s decisions. Human team members generally prefer to retain some control, but their preferences vary greatly. Interestingly, humans base their evaluations of AI autonomy on how “arousing” or exciting they find it, suggesting a complex interplay between trust, control, and engagement.

The Fuzzy Boundaries of AI Teammates

Determining when an AI truly becomes a teammate is a nuanced question. Factors like system characteristics, individual differences within the team, and overall team composition all play a role. Our tendency to anthropomorphize, even naming our tools, further blurs the lines.

Can AI Trust Too?

From the technical side, seamless human-AI collaboration requires AI to understand human behavior, team dynamics, and the specific environment. This involves teaching AI about ontology (the very meaning behind words and concepts) at a micro level to enable meaningful interaction and trust. Anna-Sophie emphasizes that trust in human-AI teams is a two-way street. Her research delves into the concept of trust between machines, exploring how both humans and AI have perceptions, evaluations, and behaviors that influence trust within the team constellation. By understanding these intricate micro-level interactions, we can pave the way for smoother, more trusting collaborations.

Ultimately, effective human-AI collaboration must focus on human well-being and performance. Creating a psychologically safe environment, where anxieties are addressed and open feedback loops exist, is crucial for building trust and for ensuring a positive, productive future alongside our new AI colleagues.